Canary deployments support

Canary deployment is a way to reduce the risk of deploying a new software version in a production environment by gradually exposing new software instances to production traffic.

In VaaS you can designate a specific varnish server as a canary varnish. If a director of a service has at least one backend with the tag 'canary', then a varnish server designated as canary will only have the canary backends in this director.

At this point you can test the new version of your application by adding the canary varnish to production traffic. For example, you can add this varnish to the load balancer with a lower ratio than the other varnishes.
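To get a feel for how much traffic a lower ratio sends to the canary varnish, the weighting can be sketched as below. This is only an illustration: the server names and ratios are hypothetical, and a real load balancer is configured through its own settings rather than through code like this.

```python
import random

# Hypothetical pool: the names and ratios are illustrative, not VaaS settings.
VARNISH_POOL = {
    "varnish-1": 10,
    "varnish-2": 10,
    "varnish-canary": 1,  # the canary varnish gets a lower ratio
}


def traffic_share(pool):
    """Return the expected fraction of requests each server receives."""
    total = sum(pool.values())
    return {name: ratio / total for name, ratio in pool.items()}


def pick_server(pool, rng=random):
    """Pick one server at random, proportionally to its ratio."""
    names = list(pool)
    weights = [pool[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]
```

With the ratios above, the canary varnish is expected to receive 1 out of every 21 requests, so a faulty release affects only a small part of production traffic.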

The second option is to test the site by browsing it only through the varnish marked as canary. For example, you can configure your load balancer so that, if an HTTP request contains a specified header, the load balancer passes the traffic only to the canary varnish server.
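The header-based rule can be sketched as follows. The header name `x-canary`, the server names, and the round-robin fallback are all assumptions made for the example; the actual rule depends on the load balancer you use.

```python
# Hypothetical header and server names, used only for illustration.
CANARY_HEADER = "x-canary"
PRODUCTION_POOL = ["varnish-1", "varnish-2"]
CANARY_SERVER = "varnish-canary"


def route(headers, request_number=0):
    """Return the varnish server that should handle a request.

    Requests carrying the canary header go to the canary varnish only.
    All other requests are spread over the production pool (round-robin
    here, as an assumed fallback policy).
    """
    # HTTP header names are case-insensitive, so compare in lower case.
    header_names = {name.lower() for name in headers}
    if CANARY_HEADER in header_names:
        return CANARY_SERVER
    return PRODUCTION_POOL[request_number % len(PRODUCTION_POOL)]
```

A tester who sets the header in the browser sees only the new version, while regular users never reach the canary varnish.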

Workflow

Setting varnish as canary server

Open the varnish server in the GUI:

Cluster -> Varnish servers -> select varnish server -> select "Is canary"

Setting director mode to support canary for its backends

Open the director in the GUI:

Manager -> Directors -> select director -> set Mode to Random

Setting backend as canary instance

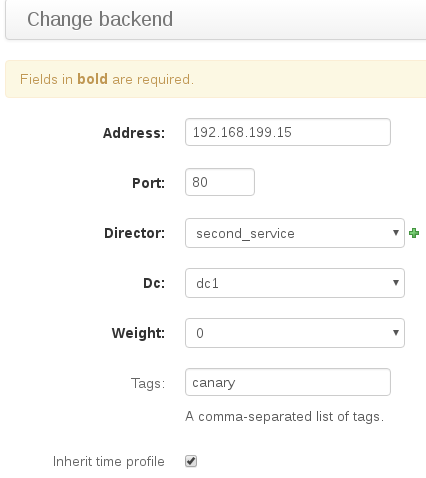

Manager -> Backends -> select backends -> add "canary" (without quotes) to Tags field

Implementation

Below is a part of the VCL generated by VaaS for a normal varnish server ("Is canary" not selected). The backend 'second_service_9_dc1_2_2_80' has the 'canary' tag and weight 0. In this case varnish passes traffic to the production backends, but not to the canary backend. If you raise the weight of the canary backend (second_service_9_dc1_2_2_80) above 0, varnish will pass traffic to both the production backends and the canary backend.

    backend second_service_2_dc2_2_1_80 {
        .host = "127.0.2.1";
        .port = "80";
        .max_connections = 5;
        .connect_timeout = 0.30s;
        .first_byte_timeout = 5.00s;
        .between_bytes_timeout = 1.00s;
        .probe = second_service_test_probe_1;
    }

    director second_service_dc2 random {
        {
            .backend = second_service_2_dc2_2_1_80;
            .weight = 1;
        }
    }

    backend second_service_3_dc1_2_1_80 {
        .host = "127.4.2.1";
        .port = "80";
        .max_connections = 5;
        .connect_timeout = 0.30s;
        .first_byte_timeout = 5.00s;
        .between_bytes_timeout = 1.00s;
        .probe = second_service_test_probe_1;
    }

    backend second_service_9_dc1_2_2_80 { # <--------------------- canary backend
        .host = "127.4.2.2";
        .port = "80";
        .max_connections = 5;
        .connect_timeout = 0.30s;
        .first_byte_timeout = 5.00s;
        .between_bytes_timeout = 1.00s;
        .probe = second_service_test_probe_1;
    }

    director second_service_dc1 random {
        {
            .backend = second_service_3_dc1_2_1_80;
            .weight = 1;
        }
        {
            .backend = second_service_9_dc1_2_2_80; # <--------------------- canary backend
            .weight = 0;
        }
    }

Below is a part of the VCL generated by VaaS for a canary varnish server ("Is canary" selected). In this case, only the backend tagged 'canary' appears in the service's director. The backend 'second_service_9_dc1_2_2_80' has the 'canary' tag and weight 0 in VaaS, yet varnish will pass traffic to it: if a canary backend has its weight set to 0 in VaaS, the weight is automatically changed to 1 in the VCL generated for the canary varnish server (otherwise varnish would not pass traffic to that backend).

    backend second_service_9_dc1_2_2_80 { # <--------------------- canary backend
        .host = "127.4.2.2";
        .port = "80";
        .max_connections = 5;
        .connect_timeout = 0.30s;
        .first_byte_timeout = 5.00s;
        .between_bytes_timeout = 1.00s;
        .probe = second_service_test_probe_1;
    }

    director second_service_dc1 random {
        {
            .backend = second_service_9_dc1_2_2_80; # <--------------------- canary backend
            .weight = 1; # <--------------------- weight changed from 0 to 1
        }
    }
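The selection rule illustrated by the two VCL listings can be summarized in a short sketch. It is not the VaaS source code: the `Backend` class and the `backends_for` function are hypothetical names, and the behaviour for a director without any canary backend (all backends kept unchanged) is an assumption inferred from the description above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Backend:
    """Hypothetical, simplified model of a backend in one director."""
    name: str
    weight: int
    tags: frozenset = frozenset()


def backends_for(varnish_is_canary, backends):
    """Return the backends to render in a director for one varnish server."""
    canary = [b for b in backends if "canary" in b.tags]
    # A normal varnish keeps every backend with its configured weight.
    # Assumption: a canary varnish does the same when the director has
    # no backend tagged 'canary'.
    if not varnish_is_canary or not canary:
        return list(backends)
    # A canary varnish keeps only the canary backends; a weight of 0 is
    # raised to 1 so that varnish actually passes traffic to them.
    return [replace(b, weight=1) if b.weight == 0 else b for b in canary]
```

For the `second_service_dc1` director above, a normal varnish would get both backends with weights 1 and 0, while a canary varnish would get only `second_service_9_dc1_2_2_80` with weight 1.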